Research projects

Projects

- CrowdLogger 2.0 (2012–2015)

- CrowdLogging: Distributed, private, and anonymous Search Logging (2010–2012)

- Video retrieval (2012)

- Search task assistant (2012)

- Microsoft Research Internship (2012)

- How users search for books (2012)

- Book retrieval (2011)

- Investigating Searcher Frustration (2009–2010)

- Popularity Ranking using Query Logs (2009)

- Detecting Task Boundaries in Domain-Specific Query Logs (2008)

- Spell Correction using Domain-Specific Query Logs (2008)

- Query Log Mining (2008)

- Retrieving Document "Hot Spots" (2007–2008)

- Testing of Text Retrieval Test Collection Generation Algorithms (2007)

- Qauality Assessment using Language Processing (QALP) (2004–2007)

- Slicing Research (2004)

CrowdLogger 2.0

Fall 2011–2015Note: I've stopped maintaining CrowdLogger. Feel free to fork the CrwodLogger GitHub project.

We've been working to extend CrowdLogger into a platform for conducting Web interaction studies. CrowdLogger, available as a Chrome and Firefox extension, logs users' interactions with their browser and stores the data on users' computers—nothing is uploaded. Researchers can develop extensions to CrowdLogger, called apps (or CrowdLogger Remote Modules—CLRMS), which study participants can install within CrowdLogger. These apps can interact with a rich API, giving them access to user data and other useful resources, and they can, pending consent of the user, upload data to a server. The privacy mechanisms that were part of the original CrowdLogger (see below) are still there, but are now available as an API function.

Development of this new CrowdLogger has gone pretty well, thought not without its rough spots. As the codebase grows in complexity, we find that things break more often than before. In addition, Google has announced that Windows Chrome users will no longer be able to install extensions from outside of the Chrome Web store. We are thinking of some alternative ways to make CrowdLogger more available and more robust. If you have any thoughts or would like to contribute, shoot me an email!

If you want to give developing apps for CrowdLogger a try, see this tutorial.

Resources

- CrowdLogger website

- CrowdLogger code base

- CrowdLogger Google group

- CrowdLogger app development documentation

Publications/Talks

- H. Feild. "CrowdLogger: A platform for conducting remote web interaction studies," Invited talk at the University of North Carolina Chapel Hill, November 16, 2013, Chapel Hill, NC. [slides (pptx)] [slides (pdf)]

- H. Feild and J. Allan. "Using CrowdLogger for in situ information retrieval system evaluation," In the proceedings of the 2013 CIKM Workshop on Living Labs for Information Retrieval Evaluation (LivingLab'13), San Francisco, CA, p. 15–18, 2013. [ACM w/ free pdf] [code] [UMass CrowdLogger page] [slides (pptx)] [slides (pdf)]

- H. Feild. "CrowdLogger as a Community Platform for Searcher Behavior Experiments," DC-area Information Retrieval Experts (DIRE) workshop, 2012. [pptx] [pdf] [abstract]

CrowdLogging: Distributed, private, and anonymous Search Logging

Spring 2010–Fall 2011This joint work with James Allan and Joshua Glatt focuses on a system for collecting search logs in a distributed manner—storing the data on users' computers rather than in a central database. This gives each user full control over his or her search data. Mining jobs, or experiments as we call them, can be run on each user's computer over their data. Experiments produce search artifacts—pieces of information, which can be something like a query or a query pair. These artifacts are then encrypted and uploaded to a server via a bank of anonymizers. However, they encryption is special; it uses a secret sharing scheme, which only allows a particular artifact to be decrypted by the server if it has been uploaded by at least k different users. Using a secret sharing scheme is not new for search logging; Eytan Adar suggested using such a scheme in this paper. On the server side, we can aggregate the encrypted artifacts and decrypt the ones that have sufficient support (that is, the artifact has been uploaded by at least k distinct users) and then get counts for each unique artifact.

You can see a demo of a Javascript implementation of Shamir's Secret Sharing Scheme in action here. You can download it here.

Details about this system can be found in our SIGIR 2011 paper, CrowdLogging: Distributed, private, and anonymous search logging. We have implemented CrowdLogging into a system called CrowdLogger. The live version of CrowdLogger can be found here ; the open source code can be found here; and a Google group can be found here.

Publications

- H. Feild, J. Allan, and J. Glatt. "CrowdLogging: Distributed, Private, and Anonymous Search Logging," In the proceedings of the 34th International ACM Conference on Research and Development in Information Retrieval (SIGIR'11), July 24–28, 2011, Beijing, China. [ACM w/ free pdf] [slides (pdf)] [slides (pptx)]

Video retrieval

Summer 2012–PresentThis project focuses on leveraging OCR and automatic speech recognition output of videos to improve video retrieval. This is a small part of a much larger project. At this point, it is not very interesting from a research perspective.

(Top of page)Search Task Assistant

Spring–Summer 2012I developed a search task assistant (STA) as a Chrome (and soon to come Firefox) extension. Actually, it's mixed in with CrowdLogger 2.0 (not yet released). What is an STA? It organizes your search history into tasks using a simple classifier. As you conduct new web searches, the STA predicts whether you're starting a new task or picking up where you left off in an old one. It lets you browse, search, and modify your task history. Pretty cool!

Publications

- H. Feild and J. Allan. "Task-Aware Search Assistant (Demonstration)," In the Proceedings of the 35th International ACM Conference on Research and Development in Information Retrieval (SIGIR'12), Portland, Oregon, USA, p. 1015, 2012. [ACM w/ free pdf] [poster pdf]

Microsoft Research Internship Spring 2012

In the Spring of 2012, I had the opportunity to work with the incredibly talented and hard working Ryen White of MSR (as of January 2014, Ryen has seven best paper awards listed on his site!). Ryen had this idea that he wanted me to help design and run a study to test, something called clickable snippets.

Lets start with the motivation. How often do you find yourself in the following situation: you're on a search results page and you see a result with a seemingly perfect snippet, but when you visit the page you either a) can't find the corresponding piece or b) you eventually find it, but you had to resort to using Ctrl+F? I know I've certainly been there!

What if search result pages told you which pieces of a snippet were actually located on the landing page? And if you click a snippet, you were brought right to where that information lives on the landing page? That was the idea behind clickable snippets—snippets are clickable and clicking on one brings you to the relevant information on the landing page.

To test this, we ran a lab study with 48 participants. We had a set of preset queries and search result pages, and all the landing pages were cached. Participants generally preferred clickable snippets over several baseline systems (including thumbnail previews, which Google used to do), and were more efficient at completing search tasks. See the paper for more details.

Publications

- H. Feild, R. White, and X. Fu. "Supporting orientation during search result examination," In the proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems (CHI'13), Paris, France, p. 2999–3008, 2013. [ACM w/ free pdf]

How users search for books

Winter 2012Our group (CIIR) came into some Apache logs collected by the Open Library. The Open Library contains a community-curated library catalog that can be browsed and searched. You can even search over full text for books that have been scanned in. It includes pages for books, authors, subjects, and user-generated reading lists. A nice feature is that each book has links for: reading, borrowing, and buying.

My lab mates Jin Young Kim and Marc Cartright and I cooked the Apache log down to a halfway decent search log. We found that most book page visits originated from Google searches. The next most frequent were from Open Library searches, and the rest were from various external search sites, e.g., Bing, Yahoo!, Ask.com, and smaller search and library sites. So, we decided to break the data into "external search" (using only Google-origin search) and "internal search" (using only Open Library searches). The other sources were ignored.

Given this data, we analyzed search behavior for internal and external searches at the levels of: query, session, and user. Users were defined as all activity in the log tied to the same IP address on the same day. Session were identified by inserting a break every time two adjacent user events were more than 30 minutes apart. We found that external queries are substantially longer (4.2 words) than internal queries (2.3 words) and, unsurprisingly, external searchers are more likely to visit a single book page and then abandon the site.

We published a paper in CIKM 2012 (listed below), so check it out. Also, we are trying to release an evaluation set that includes book metadata, queries, and click frequencies. We are awaiting permission from the Internet Archive (who operates the Open Library).

Publications

- J.Y. Kim, H. Feild, and M. Cartright. "Understanding Book Search on the Web," In the proceedings of the 21st ACM Conference on Information and Knowledge Management (CIKM'12), Maui, Hawaii, USA, p. 744–753, 2012. [ACM w/ free pdf] [data set]

Book Retrieval

Summer 2011Working with James Allan and Marc Cartright, the three of us set out to investigate book retrieval. We first looked at the "Prove it" task in the INEX book retrieval track. The goal of "Prove it" is to find pages in a set of scanned books that either proves or refutes a given statement. An optional part of the task was to indicate explicitly whether the page was supportive, refutative, or a mix...we ignored that part. What we found was that combining passage retrieval and a sequential dependency model worked pretty well. We submitted and did very well (even if you ignore the fact that there were only two teams). You can find our write up below.

In trying to think of applications for book search, we decide to apply idea behind Prove it to a real-world situation: finding citations for Wikipedia assertions. Wikipedia is a great dataset for this because we know which statements are assertions (they have footnotes) and which are in need of citations (they have a [citation needed] footnote). In addition, there is plenty of context for each statement. We considered the paragraph, section, section title, and page title as sources of context. We selected a handful of citations from pages that looked like they were on topics covered by a set of 50k scanned, public domain books and commenced searching. We found that using a sequential dependency over the assertion and surrounding paragraph did very well. We also found a few interesting refutations, such as when George Washington was sworn into office. We submitted this to the CIKM BooksOnline workshop where it received the Best Workshop Paper Award.

Publications

- H. Feild, M. Cartright, and J. Allan. "The University of Massachusetts Amherst's participation in the INEX 2011 Prove It Track," In the Proceedings of the Initiative for the Evaluation of XML Retrieval workshop (INEX'2011), Saarland, Germany, p. 80–85, 2011. [pdf]

- M. Cartright, H. Feild, and J. Allan. "Evidence Finding using a Collection of Books," The proceedings of the International Conference on Information and Knowledge Management BooksOnline workshop (BooksOnline'11), Octobater 28, 2011, Glasgow, Scotland, UK. [ACM w/ free pdf] [presentation (pdf)] Best Workshop Paper Award!

Investigating Searcher Frustration

Spring 2009–Spring 2010One of the things I am interested in is understanding how information retrieval frustration (e.g., frustration during a Web search) can be modeled and how those models can be used to facilitate adaptive search systems that reduce user frustration. Many well-performing retrieval algorithms have developed over the years (e.g., explicit relevance feedback, query expansion and reduction, dependency modeling). However, many are not used because of the necessity of user interaction and/or high cost, both of which are usually unacceptable in a live search system. One hypothesis is that frustrated users—i.e., searchers who are having difficulty finding the information they are seeking—are more apt to use these alternative retrieval models. We then ask: when should what model be presented to the user and how?

My masters thesis explored the feasibility of predicting frustration during a search session. To do this, we conducted two user studies in which participants were asked to search for predefined information needs, or tasks, and we used three physical sensors to track facial expressions, pressure exerted on the mouse, and sitting position. In addition, we constructed a Firefox toolbar to track participants' interactions with the browser, including mouse movements, document views, and feedback about pages visited and the queries submitted to four major search engines. We also describe this work in this SIGIR 2010 paper. The data for this work can be found here.

While interning at Yahoo!, we started looking into how frustrated users could be helped. We explored an additional variable, search self-efficacy. This is how effective of a searcher users think they are. If you think you're a poor searcher, then you probably will not get frustrated with a retrieval system (you'll blame it on yourself). If you get frustrated with the search process, the kind of help you'll require may be simple, like a longer list of query suggestions. If you think you're an amazing searcher, you probably will get frustrated with the system and will be more likely (we hope) to use advanced search tools.

To explore tools to assist frustrated users, we conducted a 400-person study on Amazon Mechanical Turk. The results were inconclusive, but published on the search self-efficacy of Turkers and also filed a patent. See below.

Publications

- H. Feild and J. Allan. "Modeling Searcher Frustration," In the Proceedings of the Third Workshop on Human-Computer Interaction and Information Retrieval (HCIR'09), Washington DC, USA, p. 5–8, 2009. [pdf]

- H. Feild. "Exploring Searcher Frustration," Masters project, University of Massachusetts Amherst, December 2009. [pdf]

- H. Feild, J. Allan, and R. Jones. "Predicting Searcher Frustration," In the Proceedings of the 33rd International ACM Conference on Research and Development in Information Retrieval (SIGIR 2010), July 19–23, 2010, Geneva, Switzerland. [ACM w/ free pdf] [slides (pdf)]

- H. Feild, R. Jones, R.C. Miller, R. Nayak, E.F. Churchill, E. Velipasaoglu. "Logging the Search Self-Efficacy of Amazon Mechanical Turkers," In the Proceedings of the SIGIR 2010 Workshop on Crowdsourcing for Search Evaluation, July 24, 2010, Geneva, Switzerland. [pdf] [slides (pptx)]

- H. Feild, O.E. Velipasaoglu, B. Dumoulin, E.F. Churchill, R. Jones, J. Bardzell. "Systems And Methods for Providing Search Assistance Technologies Based on User Self-efficacy and Search Frustration", US Patent App. 12/978,261 (2010). [Google patent page]

Popularity Ranking using Query Logs

Spring–Summer 2009This is joint work with Bob Armstrong, James Allan, and Jeff Dalton. We are investigating learning-to-rank techniques for use with the medical publication search company that provides us with query logs. The high-level idea is that we would like to incorporate a document's click-though rate for a specific query into where that document is ranked in the results list. Ideally, the most popular documents will be placed closer to the top of the list.

(Top of page)Detecting Task Boundaries and Tasks in Query Logs

Fall 2008As part of an independent study, I implemented the task boundary detection work described by (Jones and Klinkner, 2007) for use on the medical publication search data used in my previous projects (see spelling correction and query log mining). Jones and Klinkner's work uses logistic regression to learn 1) when any two queries from the same search session are part of the same task and 2) when there is a boundary between two consecutive queries. Our study focused just on the first one.

In the context of this work, a task can be defined as a information goal or as an information mission. Goals contain one or more queries from the same search session, while missions contain one or more goals. However, a goal is not allowed to be in more than one mission.

In our study, we asked graduate students to label pairs of queries as belonging to the same goal and mission. We then used a maximum entropy classifier and ten-fold cross validation to learn the labels. After normalizing the features, we attained classification accuracies comparable to those used by Jones and Klinkner on Yahoo! data.

Going beyond previous work, we also used clustering techniques to create goal and mission groups using the pair-wise classifications from above. We then attempted to use these to detect when the user was frustrated (this makes the unfounded assumption that frustration is contained within a task).

This was interesting work, but a little ahead of its time with respect to the frustration detection. I plan to revisit this work in the future, once I've investigated more closely what searcher frustration is and how it can be automatically detected.

References

- R. Jones and K. L. Klinkner. "Beyond the session timeout: automatic hierarchical segmentation of search topics in query logs, in the Proceedings of CIKM, 2008.

Spell Correction using Medical Domain Query Logs

Fall 2008Building off of the exploration from the query log mining work, Xing Yi and I created a novel spelling correction algorithm that uses same-session reformulation information—similar to (Jones et a., 2006)—as well as language models—similar to (Cucerzan and Brill, 2004)—and trusted domain-specific lexicons.

References

- S. Cucerzan and E. Brill. "Spelling correction as an iterative process that exploits the collective knowledge of web users," In EMNLP, pages 293-300, 2004.

- R. Jones, B. Rey, O. Madani, and W. Greiner. "Generating query substitutions," In WWW, pages 387-396, 2006.

Query Log Mining

Summer 2008This line of work was more just implementation and data exploration. The implementation part had to do with writing code to use with TupleFlow, a kind of MapReduce framework developed by Trevor Strohman (now at Google). Marc Cartright, Elif Aktolga, and I wrote a Java package that uses TupleFlow to mine query logs. The query logs were from a medical document search company. We explored query reformulation, click-through data, and query similarity.

(Top of page)Retrieving Document "Hot Spots" (a.k.a. Passage Retrieval) Fall 2007 - Spring 2008

Between Fall 2007 and Spring 2008, I worked towards developing a search algorithm to find the `hot spots' within a document relative to a given query.

The goal of this research is to return to a user a ranked list of text passages extracted from documents in a corpus. The passages are ranked according to the retrieval system's belief that a passage is relevant to the user's information need.

To do this, I created an algorithm to calculate the Query-Likelihood score for each document and then distributed that score across the document wherever a query term occurred. As can be seen below, the Query-Likelihood language model calculates the probability of a query, Q (which is made of of one or more terms), given a document, D. P(Q|D) is the product of the probability of each term, q, in the query given the document. This is done using a maximum likelihood estimation (MLE) of term frequencies in the document smoothed with a background model of term frequencies in the collection.

Now, think of a document as a giant array where the indices refer to term positions within the document and the value is a score associated with that position. My method computes each P(q|D) and then assigns a fair portion of that probability to each position in the document array where q occurs. So, if P(q|D) = .6 and q occurs 2 times, then both indices in the array that refer to the positions where q occurs in the document get a score of .3.

Once these scores are distributed, they are smoothed by placing a Gaussian kernel on top of each position in the array that has a non-zero score. All interference between kernel smoothings is additive, so that if two query terms are near by, once they are smoothed out, there should be several indices between the two that have a higher score than if only one of the query terms was present.

After smoothing, each group of contiguous indices with a score above a threshold are considered to be a 'hot spot'. I experimented with various automatic thresholding methods, including local (the threshold used for each document is different) and global (the threshold is constant over all documents for a particular query) methods. These generally involved setting the threshold to some percentage of the max score in the document or for all documents.

Initial results using the Text REtrieval Conference (TREC) Genomics 2006 corpus were not very promising. Because of these poor results and some higher priority projects, my work with locating document hot spots has been shelved for the time being.

References

- O. de Krester and A. Moffat. "Effective Presentation with a Locality-Based Similarity Heuristic," In SIGIR, 1999, 113-120. [web]

Testing of Text Retrieval Test Collection Generation Algorithms

Summer 2007During the Summer of 2007, I was a part of the the Summer Undergraduate Research Fellowship (SURF) program at the National Institute of Standards and Technology (NIST). I spent my time working for the Text REtrieval Conference (TREC) group testing the effectiveness of the Minimal Test Collection algorithm developed at the University of Massachusetts Amherst by Carterette, Allan, & Sitaraman. This was one of the two algorithms used to evaluate retrieval systems in the TREC Million Query Track in 2007 and 2008.

(Top of page)Quality Assessment using Language Processing (QALP)

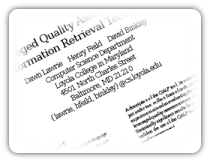

2004 - 2007The QALP team consists of:

QALP Scores I

Winter-Spring 2005Running off the idea that good code is accompanied by good comments, the QALP group put together a program to find the cosine similarity between code within a function and its comments. This similarity, referred to as a QALP Score, needed to be correlated to a quality rating for each function. To find a quality rating, a sample of functions were assembled into a survey, which was then given to undergraduate students. The functions in the survey were stripped of all comments and the students were asked to asses the each function's quality using a variety of criteria.

Identifier Splitting

Summer 2005 - Summer 2006While many identifiers make use of some sort of division marker (e.g. an underscore or camelCasing) to break up concepts within an identifier, many do not, or at least not completely. The QALP group's favorite example of this is thenewestone. This particular identifier should be the_newest_one. Imagine having to analyze source code where identifiers all looked like that and you would have to mentally 'split' them. Wouldn't it be easier if there was a program that would split them for you? We thought so, so we looked into two methods—a 'greedy' algorithm and a neural network. Turns out the greedy algorithm works better.

Sources

Identifier Makeup Study

The QALP group was curious to see what type of identifiers were mostly easily understood by programmers. Consider variations on a variable that references the index of an array; you might see i, idx, or index. A survey of 12 functions was assembled and put up on the net which asked those who took it to explain the purpose of each function. For each of the 12 functions, there were three versions—one with single-character abbreviated variables, one with multi-character abbreviated variables, and one with full, non-abbreviated variables. The user was randomly given one of the three versions for each function.

QALP Scores II

Summer 2006In an attempt to refine the study conducted in the Winter and Spring of 2005, this version upgraded the QALP Scoring process, scoring source code on a per-class basis.

In addition, instead of using a subjective measure of quality with which to correlate the QALP Score, this time defect rates were used.

(Top of page)Slicing Research

Summer 2004My first summer assisting with research consisted of me finding source code for use with Dr. Binkley's Amorphous Program Slicer. I spent my time familiarizing myself with Linux, running the program slicer on open source programs, and then graphing results.

(Top of page)